This is not the future we were promised.

Sure, science fiction writers have overestimated our rate of technological development many times in the past. We never got flying cars in the 1980s, a space odyssey in 2001, or a lunar colony in 2018 (per Babylon 5).

Those same writers imagined all kinds of intelligent machines doing all sorts of things. The machines might take over the drudgery of menial labor and usher in a utopia, or rise up in an all-out war against their human masters. Despite such a wide array of options, very few of these stories dealt with a scenario where AIs would paint, write, and compose, stealing creative jobs from human artists. This may not be Skynet-level bad, but it is not okay, right?

Of course, the statement “AI creates art and steals jobs from artists” is wildly inaccurate and contains two and a half fallacies.

The first fallacy is the use of the term ‘AI.’ The term Artificial Intelligence was coined in the 1950s, but it names something we haven’t come close to achieving, and while AI is currently the hottest Silicon Valley buzzword, it doesn’t accurately describe the technologies involved. Midjourney, DALL-E, ChatGPT, Bard, and similar tools are powered by what are called LLMs: large language models that run on neural networks. Basically, they catalog and label enormous amounts of data and then evaluate this data very quickly using an artificial process modeled after the synapses in a biological brain. Sounds complicated? It is, which is why we so readily default to the simple-but-erroneous catchall of ‘AI.’ Nothing that approximates human-level intelligence is involved. There’s just data processing at an industrial scale, and the more data you feed into such a model, the better results it is likely to spit out.

The second fallacy is that these programs create anything. Their job is to generate text or an image that they judge to be the closest mathematical match to whatever query they’re given. They approximate a response, and the outcome is best described by Ted Chiang, who called ChatGPT “a blurry JPEG of the web.” Since these LLMs are only concerned with producing what looks like a proper response, they have no problem whatsoever with mixing reasonable-sounding falsehoods in among the facts. On a philosophical level, one might argue that making up facts is indeed a creative process, much like telling a story. On a practical level, if I wanted bald-faced lies proffered to me as facts with the overconfidence of a coddled teenager, there are plenty of pundits and TV channels I could tune in to for that.

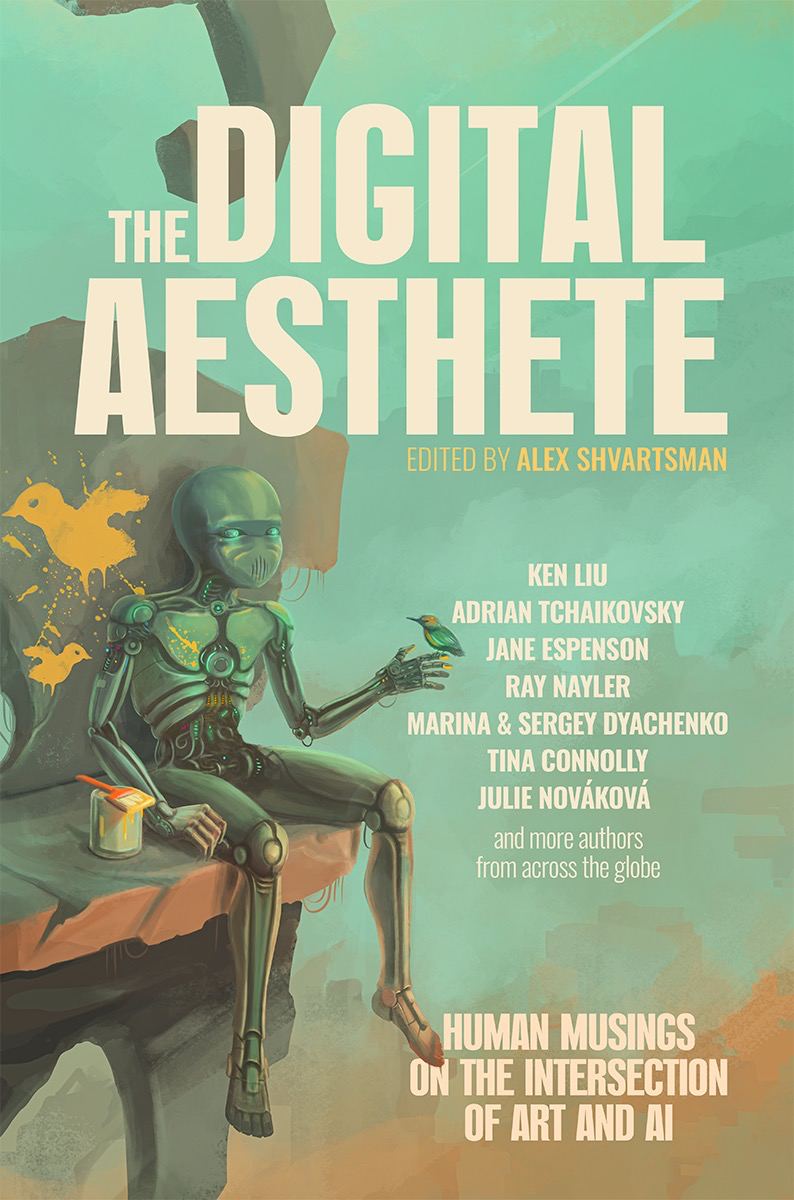

I asked Bard and ChatGPT for my own bio. “He attended the University of Pennsylvania, where he studied English and Russian,” lied Bard. It added, “Shvartsman has also written two fantasy novels, Eridani’s Crown (2019) and The Digital Aesthete (2023).” Yes, this science fiction anthology you’re reading now. The irony is delicious.

ChatGPT has a different yet equally erroneous idea about my education. “He holds a master’s degree in computer science from the Brooklyn College of the City University of New York.” It then goes on to pad my resume a lot more than Bard does: “He has been a finalist for the Hugo Award, the Nebula Award, and the World Fantasy Award.” (From your twisted database to God’s ears, Chatbot.) And, improbably: “Shvartsman has been involved in organizing and running science fiction conventions. He has served as a program coordinator and panelist at various conventions, including Boskone and Capclave.” This should come as a surprise to the good folks who run those conventions—and especially Boskone, which I’ve never even attended.

I have been a panelist at many a Capclave. There are plenty of accurate facts parsed from Wikipedia and elsewhere in both iterations of my bio. To any person who isn’t an expert on SF/F awards and publications, the bio will look reasonable and authoritative, which is the point, and also the danger of accepting LLM-generated content at face value.

But while lying may in fact be a creative process, making things up is not enough to tell a compelling story. Any fiction which LLMs are capable of generating as of mid-2023 is bland and uninspired at best. To paraphrase Chiang, it is a blurry image of what a story should look like. It has no soul and contains no gorgeous prose capable of delighting the reader and keeping them up late at night to read just one more chapter.

Chiang addresses the comparison of LLM-generated prose to a first draft: “Some might say that the output of large language models doesn’t look all that different from a human writer’s first draft, but, again, I think this is a superficial resemblance. Your first draft isn’t an unoriginal idea expressed clearly; it’s an original idea expressed poorly, and it is accompanied by your amorphous dissatisfaction, your awareness of the distance between what it says and what you want it to say.” I say even entertaining the comparison is generous. A good writer’s first draft isn’t usually milquetoast.

A plot generated by a neural network tends to be formulaic, inoffensive, and often saccharine. It goes out of its way not to challenge the reader or the reader’s worldviews, much like the Feelies in Huxley’s Brave New World. Except the Feelies were capable of drawing in the viewer, even if they were heavy-handed in manipulating that viewer’s reactions and emotions.

We’ve all seen beautiful pictures from Midjourney, presently the undisputed leader among several image-generating programs. It appears the LLMs are a lot more adept at generating visual art than text. But are they really? Artists and software engineers will both tell you they’re far from perfect. Even those images that do not have obvious issues, such as too many fingers on a character’s hand or a building melting into the ground, may still suffer from poor perspective, characters staring into nothing, and other such imperfections. As explained by AR/VR engineer and award-winning author Kimberly Unger, “More people have a grounding in the structure of language, even though they may have forgot the specifics, than have a grounding in the structure of visuals. So to your average observer, it’s easier for them to understand, often unconsciously, where the text goes wrong, than it is for them to understand where the pictures go wrong.”

Except, some images turn out better than others. And since the program can generate them endlessly, determined individuals are able to get the results they want. Prompt engineering is a skill set that allows people to hone their queries to produce results other humans are likely to judge more pleasing. Some prompt engineers claim this makes them artists, and while I find this claim to be a stretch, I do think that, at least at some level, this makes them curators in the same way a magazine editor reviews hundreds of story submissions to select one or two they’d like to publish.

Which brings us to the remaining half-fallacy (and please indulge me for the moment on the ‘half’ part): LLMs steal jobs from artists.

Commercial artists are among those affected the most. So many LLM-generated images are being used in book covers, interior illustrations, and other graphics that it is impossible to argue that artists are not already being hit in the pocketbook.

Generative text may not yet be good enough to replace writers and translators of fiction, with “yet” being the key word. But we’re already seeing a lot of copywriting and copyediting work being shifted to a light and poorly compensated revision process of whatever an LLM has generated in seconds.

And then there’s the big one: much of the data fed into the large language models isn’t in the public domain. LLMs regurgitate art, books, articles, and other copyrighted material, so much so that critics have taken to calling them “plagiarism machines.” Those faux bios of mine had lines lifted verbatim from online sources. Anyone relying on a chatbot to generate text for them can’t be certain they won’t end up with an unattributed direct quote. But even if they don’t, a case can be made that tiny bits of creative work are being lifted from individual creators, like a bank heist where a penny is stolen from every account to net the thief millions.

Proponents of the technology argue that neural nets “learn” in the same way the human brain does. I read Bradbury and Bulgakov and Brackett growing up, and each author is a little bit responsible for whatever writing style I eventually developed. The same holds true for painters and composers and cinematographers; none of us create in a vacuum. But whatever philosophical similarities may exist, there are legal distinctions, and those distinctions are already being tested. On June 28, 2023, writers Mona Awad and Paul Tremblay filed a lawsuit against OpenAI, the company behind ChatGPT, claiming that their copyrighted books were being used to train the LLM without their consent. Days later, similar lawsuits against OpenAI and Meta were filed by Sarah Silverman, Christopher Golden, and Richard Kadrey.

At some point laws will likely be passed that govern what companies can and cannot do when training their models. But as history teaches us, it’s nearly impossible to stuff the genie of new technology back into the bottle. The laws will likely differ across borders, and we may see companies relocate their generative bots to friendlier jurisdictions. Individuals will be able to shop around, choosing between ethical models trained on public-domain and opt-in material and models based in countries whose courts decide harvesting copyrighted material in this fashion is permissible. Japan has already taken an early pro-AI stance; minister of education, science, and technology Keiko Nagaoka stated that using any information in training datasets does not violate copyright laws in her country.

A much more effective strategy is social pressure. A lot of people don’t want AI art. Many science fiction magazines prohibit AI-assisted submissions. Major publishers have suffered backlash over using bits of AI-generated art in lieu of stock art. If you and I are willing to vote against such art-flavored byproducts with our dollars, then surely that can act as some sort of a check on their use?

This is where the half-fallacy comes in. What we call AI is already stealing some creative jobs, maybe enough to voice concern but not enough to put us out to pasture … yet.

The technology behind these generative engines is improving fast. Noticeable upgrades happen in a matter of weeks and months, not years. The JPEG of the web is gaining resolution and becoming a little less blurry with every iteration. Even now, it can sometimes be difficult to confirm whether a piece of digital art is human-made or generated. A major magazine recently had to pull an issue cover because they believed it to be AI-generated or AI-assisted, but the artwork was good enough for them to have selected it in the first place. So what happens when a sufficiently advanced painting, poem, or story is indistinguishable from the product of human ingenuity?

This is an existential threat and a terrifying concept to many. Others think this scenario will not be quite so terrible. They either see these tools as little different from Photoshop, or imagine exciting ways to collaborate with the machines to create something new and wonderful.

In his June 2023 Slate article, Ken Liu argues that a flood of generated content won’t significantly worsen the equation for most authors since there are already way more books published daily than anyone could ever hope to read. “I can’t see an A.I. succeeding in the market simply by writing ‘better’ books faster. The book market is much too inefficient and ridiculous to be any kind of capitalist ‘meritocracy.’”

Others see this as an inevitable and acceptable sacrifice in our never-ending march of technological progress. We always lose jobs and replace them with new ones. How many fletchers, chimney sweeps, or coachmen are there in today’s society? Except art is not merely work. It’s different from giving up coal mining or horse carriages in favor of better technologies. Many of us consider it to be an inalienable human birthright.

Will we feel differently if and when a true artificial intelligence is developed and it is able to create art and not merely generate a mathematical approximation of it? After all, we would surely not hold the same bias against art by aliens or uplifted animals. These are difficult questions, and even years of reading science fiction may not have sufficiently prepared us to come up with any sort of definitive answers.

It is not the future we were promised; what happens next may be terrible or spectacular, or both, but surely it will be fascinating. And so we do what writers have always done: we pour our anxieties and hopes about all of this onto the page.

Read the entire anthology now in ebook or print formats:

https://amzn.to/3MEG0RK